Matlab documentation for the feature: http://www.mathworks.com/help/techdoc/ref/varargin.html

Description: varargin lets a function accept a variable number of input arguments.

How this helped me:

A major part of our development process was writing parts of the pipeline as scripts to get the functionality working. In this phase, the scripts loaded hard-coded default values for many things.

Once we had basic functionality, this became a slight problem: we had to turn the scripts into functions to piece all the code together.

By accepting a variable number of arguments, we were able to keep the script functionality for quick testing while still having the ability to piece things together.

The place where this was most useful was getDataMat.m.

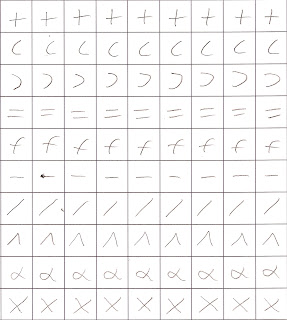

This function takes one of our dataset images, reads in each cell that contains a symbol, and outputs a matrix of the data and the corresponding class descriptions.

Caption: getDataMat turns each of these symbols into a matrix.

By using varargin, I was able to make it have 3 modes of operation:

1) The original script functionality

2) Ability to specify a directory, from which all the *.jpg images are grabbed.

EX: [x y] = getDataMat('directory', 'images/logic');

3) Ability to specify a variable number of file paths to read in:

EX: [x y] = getDataMat('images/logic/exist_1.jpg','images/logic/forAll_1.jpg');

[x y] = getDataMat('images/logic/forAll_1.jpg');

HOWTO use/Screenshots of relevant code: (I hope this doesn't contain bugs!!)

To use it, just include varargin as a parameter.

EX: function [data_x data_y] = getDataMat(varargin)

Then do:

nVarargs = length(varargin);

and have an if statement that changes the functionality based on the number of arguments.
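As a rough illustration of that if statement, here is a minimal sketch of how the three modes could be told apart. This is not the actual getDataMat code: the helper name resolveInputFiles, the placeholder default path, and the exact mode checks are all assumptions made for the example. It only works out which image files to read and leaves the real processing out.

```matlab
function files = resolveInputFiles(varargin)
% Hypothetical helper (not from the original project): returns a cell
% array of image paths, chosen according to how the function was called.
nVarargs = length(varargin);

if nVarargs == 0
    % Mode 1: no arguments, fall back to script-style defaults.
    % The path below is a placeholder, not the project's real default.
    files = {'images/default.jpg'};
elseif nVarargs == 2 && strcmp(varargin{1}, 'directory')
    % Mode 2: the keyword 'directory' followed by a folder name.
    listing = dir(fullfile(varargin{2}, '*.jpg'));
    files = cell(1, numel(listing));
    for i = 1:numel(listing)
        files{i} = fullfile(varargin{2}, listing(i).name);
    end
else
    % Mode 3: every argument is treated as a file path.
    files = varargin;
end
end
```

Saved as resolveInputFiles.m, a call such as resolveInputFiles('images/logic/exist_1.jpg', 'images/logic/forAll_1.jpg') would land in the third branch and hand back both paths unchanged.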

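Note: varargin is a cell array, so you will have to index it with curly braces, like so: varargin{i}

Indexing with parentheses, varargin(i), gives back a 1x1 cell instead of the value inside it. This can cause some tricky type errors, so be careful!!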
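To make the indexing point concrete, the short sketch below compares the two forms on an ordinary cell array. The variable args is just a stand-in for varargin (which only exists inside a function), and its contents are made up for the example.

```matlab
% args stands in for varargin; the values are illustrative only.
args = {'images/logic/exist_1.jpg', 42};

byParen = args(1);   % parentheses: a 1x1 cell array
byBrace = args{1};   % curly braces: the char vector stored in the cell

disp(class(byParen));   % prints: cell
disp(class(byBrace));   % prints: char

% A guard like this catches a wrong-typed argument early.
if ~ischar(args{2})
    disp('argument 2 is not a file path');
end
```

Passing byParen to a function that expects a file name is the kind of mistake that tends to produce the type errors mentioned above, so checking with ischar or iscell before using an argument can save some debugging.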

(Screenshot: example usage)

Using this feature eliminated the tedious coding needed to play with different subsets of the data! I hope it helps you too!

Useful links to help use this feature:

http://blogs.mathworks.com/pick/2008/01/08/advanced-matlab-varargin-and-nargin-variable-inputs-to-a-function/

http://makarandtapaswi.wordpress.com/2009/07/15/varargin-matlab/